First author publications

2023

On the dice loss variants and sub-patching

H Kervadec, M de Bruijne

Midl 2023, short paper track

PDF

The soft-Dice loss is a very popular loss for semantic image segmentation in the medical field, and is often combined with the cross-entropy loss. It has recently been shown that the gradient of the Dice loss is a “negative” of the ground truth, and that its supervision can be trivially mimicked by multiplying the predicted probabilities with a pre-computed “gradient-map” (Kervadec and de Bruijne, 2023). In this short paper, we study the properties of the Dice loss and two of its variants (Milletari et al., 2016; Sudre et al., 2017) when sub-patching is required and no foreground is present. As theory and experiments show, this introduces divisions by zero, which are difficult to handle gracefully while maintaining good performance. In contrast, the mime loss of Kervadec and de Bruijne (2023) proved to be far better suited to sub-patching and the handling of empty patches.
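The division-by-zero issue on empty patches can be illustrated with a minimal sketch in plain Python. It assumes the common soft-Dice form 1 − 2Σpg / (Σp + Σg) on flattened lists; the function name `soft_dice_loss` and the `eps` smoothing term are illustrative assumptions, not the exact formulations studied in the paper.

```python
def soft_dice_loss(probs, target, eps=0.0):
    """Soft-Dice loss on flat lists of foreground probabilities and binary labels.

    Assumed form: 1 - (2 * sum(p * g) + eps) / (sum(p) + sum(g) + eps).
    `eps` is a hypothetical smoothing term; eps=0 gives the unsmoothed loss.
    """
    intersection = sum(p * g for p, g in zip(probs, target))
    denominator = sum(probs) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denominator + eps)


# Empty patch (no foreground in the target): the loss equals 1 whatever the
# prediction is, so it carries no useful supervision for that patch.
print(soft_dice_loss([0.2, 0.7], [0, 0]))

# Empty patch and an all-zero prediction: the unsmoothed loss divides by zero.
try:
    soft_dice_loss([0.0, 0.0], [0, 0])
except ZeroDivisionError:
    print("division by zero on an empty patch")
```

Adding a small `eps` avoids the exception, but it is only one of several possible workarounds and it changes the loss value on empty patches.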

On the dice loss gradient and the ways to mimic it

H Kervadec, M de Bruijne

arXiv preprint

PDF

In the past few years, in the context of fully-supervised semantic segmentation, several losses, such as cross-entropy and Dice, have emerged as de facto standards to supervise neural networks. The Dice loss is an interesting case, as it comes from the relaxation of the popular Dice coefficient, one of the main evaluation metrics in medical imaging applications. In this paper, we first study theoretically the gradient of the Dice loss, showing that concretely it is a weighted negative of the ground truth, with a very small dynamic range. This enables us, in the second part of this paper, to mimic the supervision of the Dice loss through a simple element-wise multiplication of the network output with a negative of the ground truth. This rather surprising result sheds light on the practical supervision performed by the Dice loss during gradient descent. This can help practitioners understand and interpret results, while guiding researchers when designing new losses.

2022

Constrained Deep Networks: Lagrangian Optimization via Log-Barrier Extensions Oral

H Kervadec, J Dolz, J Yuan, C Desrosiers, E Granger, I Ben Ayed

Eusipco 2022

PDF

·

Code

This study investigates imposing hard inequality constraints on the outputs of convolutional neural networks (CNN) during training. Several recent works showed that the theoretical and practical advantages of Lagrangian optimization over simple penalties do not materialize in practice when dealing with modern CNNs involving millions of parameters. Therefore, constrained CNNs are typically handled with penalties. We propose log-barrier extensions, which approximate Lagrangian optimization of constrained-CNN problems with a sequence of unconstrained losses. Unlike standard interior-point and log-barrier methods, our formulation does not need an initial feasible solution. The proposed extension yields an upper bound on the duality gap, generalizing the result of standard log-barriers, and yields sub-optimality certificates for feasible solutions. While sub-optimality is not guaranteed for non-convex problems, this result shows that log-barrier extensions are a principled way to approximate Lagrangian optimization for constrained CNNs via implicit dual variables. We report weakly supervised image segmentation experiments, with various constraints, showing that our formulation substantially outperforms the existing constrained-CNN methods in terms of accuracy, constraint satisfaction and training stability, more so when dealing with a large number of constraints.
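As a rough illustration of why such an extension needs no initial feasible solution, the sketch below implements one possible piecewise penalty for a constraint written as z ≤ 0: a standard log-barrier far inside the feasible region, continued by a straight line elsewhere so that it stays finite for infeasible points. The exact expression, the function name `log_barrier_extension` and the parameter `t` are assumptions made for illustration; the abstract does not state the formula.

```python
import math


def log_barrier_extension(z, t):
    """Finite, differentiable penalty for a constraint z <= 0 (t > 0).

    Assumed form: the standard log-barrier -log(-z)/t for z <= -1/t^2,
    extended linearly for larger z (including infeasible z > 0).
    """
    if z <= -1.0 / t**2:
        return -math.log(-z) / t  # standard log-barrier branch
    # Linear branch: same value and same slope (t) as the log branch
    # at the junction point z = -1/t^2.
    return t * z - math.log(1.0 / t**2) / t + 1.0 / t


# A standard log-barrier is undefined for z > 0; the extension is not.
print(log_barrier_extension(2.0, t=5.0))
```

In this sketch, a larger `t` makes the penalty steeper for violated constraints and flatter for satisfied ones, which is how a sequence of unconstrained losses can be built by increasing `t` during training.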

2021

Beyond pixel-wise supervision: semantic segmentation with higher-order shape descriptors Oral Best paper award

H Kervadec, H Bahig, L Letourneau-Guillon, J Dolz, I Ben Ayed

Medical Imaging with Deep Learning (Midl)

PDF

·

OpenReview

·

Code

·

Talk

Standard losses for training deep segmentation networks could be seen as individual classifications of pixels, instead of supervising the global shape of the predicted segmentations. While effective, they require exact knowledge of the label of each pixel in an image.

This study investigates how effective global geometric shape descriptors could be when used on their own as segmentation losses for training deep networks. Beyond the theoretical interest, there are deeper motivations for posing segmentation problems as a reconstruction of shape descriptors. First, annotations to obtain approximations of low-order shape moments could be much less cumbersome than their full-mask counterparts, and anatomical priors could be readily encoded into invariant shape descriptions, which might alleviate the annotation burden. Also, some shape descriptors could be readily used to “encode” biomarkers, leading to better interpretability. Finally, and most importantly, we hypothesize that, given a task, certain shape descriptions might be invariant across image acquisition protocols/modalities and subject populations, which might open interesting research avenues for generalization in medical image segmentation.

We introduce and formulate a few shape descriptors in the context of deep segmentation, and evaluate their potential as stand-alone losses on two different, challenging tasks. Inspired by recent works in constrained optimization for deep networks, we propose a way to use those descriptors to supervise segmentation, without any pixel-level label. Very surprisingly, as few as 4 descriptor values per class can approach the performance of a segmentation mask with 65k individual discrete labels. We also found that shape descriptors can be a valid way to encode anatomical priors about the task, making it possible to leverage expert knowledge without requiring additional annotations. Our implementation is publicly available and can be easily extended: https://github.com/hkervadec/shape_descriptors.

2020

Boundary loss for highly unbalanced segmentation

H Kervadec, J Bouchtiba, C Desrosiers, É Granger, J Dolz, I Ben Ayed

Medical Image Analysis (MedIA) 67.

Journal extension of the conference paper.

PDF

·

Code

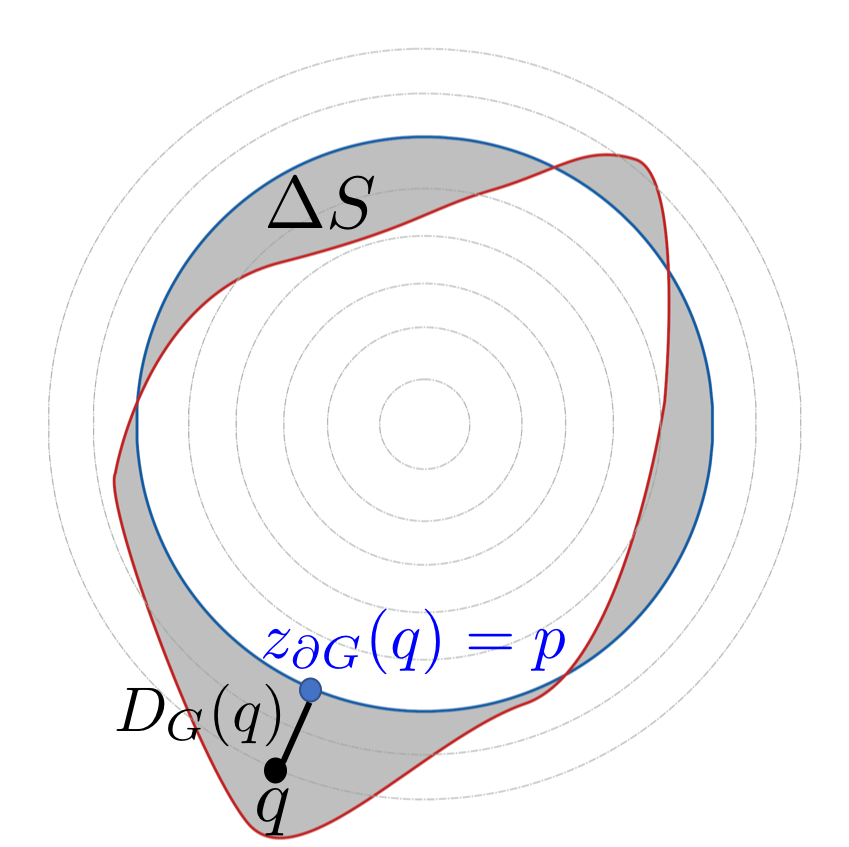

Widely used loss functions for CNN segmentation, e.g., Dice or cross-entropy, are based on integrals over the segmentation regions. Unfortunately, for highly unbalanced segmentations, such regional summations have values that differ by several orders of magnitude across classes, which affects training performance and stability. We propose a boundary loss, which takes the form of a distance metric on the space of contours, not regions. This can mitigate the difficulties of highly unbalanced problems because it uses integrals over the interface between regions instead of unbalanced integrals over the regions. Furthermore, a boundary loss complements regional information. Inspired by graph-based optimization techniques for computing active-contour flows, we express a non-symmetric $L_2$ distance on the space of contours as a regional integral, which completely avoids local differential computations involving contour points. This yields a boundary loss expressed with the regional softmax probability outputs of the network, which can be easily combined with standard regional losses and implemented with any existing deep network architecture for N-D segmentation. We report comprehensive evaluations and comparisons on different unbalanced problems, showing that our boundary loss can yield significant increases in performance while improving training stability. Our code is publicly available.

Bounding boxes for weakly supervised segmentation: Global constraints get close to full supervision Oral

H Kervadec, J Dolz, S Wang, E Granger, I Ben Ayed

Medical Imaging with Deep Learning (Midl)

PDF

·

OpenReview

·

Code

·

Talk

We propose a novel weakly supervised segmentation method based on several global constraints derived from box annotations. In particular, we bring a classical tightness prior to a deep learning setting by imposing a set of constraints on the network outputs. Such a powerful topological prior prevents solutions from excessive shrinking by enforcing any horizontal or vertical line within the bounding box to contain at least one pixel of the foreground region. Furthermore, we integrate our deep tightness prior with a global background emptiness constraint, guiding training with information outside the bounding box. We demonstrate experimentally that such a global constraint is much more powerful than standard cross-entropy for the background class. Our optimization problem is challenging as it takes the form of a large set of inequality constraints on the outputs of deep networks. We solve it with a sequence of unconstrained losses based on a recent powerful extension of the log-barrier method, which is well-known in the context of interior-point methods. This accommodates standard stochastic gradient descent (SGD) for training deep networks, while avoiding computationally expensive and unstable Lagrangian dual steps and projections. Extensive experiments over two different public data sets and applications (prostate and brain lesions) demonstrate that the synergy between our global tightness and emptiness priors yields very competitive performance, approaching full supervision and significantly outperforming DeepCut. Furthermore, our approach removes the need for computationally expensive proposal generation. Our code is shared anonymously.
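The tightness prior described above can be read as one inequality per line crossing the box: the foreground probabilities summed along that line should be at least 1. The sketch below only measures how much each such constraint is violated; it is a simplified illustration in plain Python, and the function name `tightness_violations`, the `(top, left, bottom, right)` box layout and the single-pixel line width are all assumptions, not the paper's implementation.

```python
def tightness_violations(probs, box):
    """Return max(0, 1 - line_sum) for every row and column crossing the box.

    probs: 2-D list of foreground probabilities, indexed probs[row][col].
    box:   (top, left, bottom, right), inclusive pixel coordinates
           (hypothetical layout chosen for this sketch).
    """
    top, left, bottom, right = box
    # Sum of foreground probabilities along each horizontal line in the box.
    rows = [sum(probs[r][left:right + 1]) for r in range(top, bottom + 1)]
    # Sum of foreground probabilities along each vertical line in the box.
    cols = [sum(probs[r][c] for r in range(top, bottom + 1))
            for c in range(left, right + 1)]
    # A line satisfies the prior when its sum reaches 1 (violation of 0).
    return [max(0.0, 1.0 - s) for s in rows + cols]


probs = [[0.0, 0.0, 0.0],
         [0.0, 1.0, 0.0],
         [0.0, 0.0, 0.0]]
# A box that is too loose around the single foreground pixel violates the
# prior on its first row and first column.
print(tightness_violations(probs, (0, 0, 1, 1)))
```

In a training setting, each violation would be fed to a differentiable penalty or barrier rather than simply listed, which is where the log-barrier extension mentioned in the abstract comes in.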

2019

Curriculum semi-supervised segmentation

H Kervadec, J Dolz, E Granger, I Ben Ayed

Medical Image Computing and Computer Assisted Intervention (Miccai)

PDF

·

Code

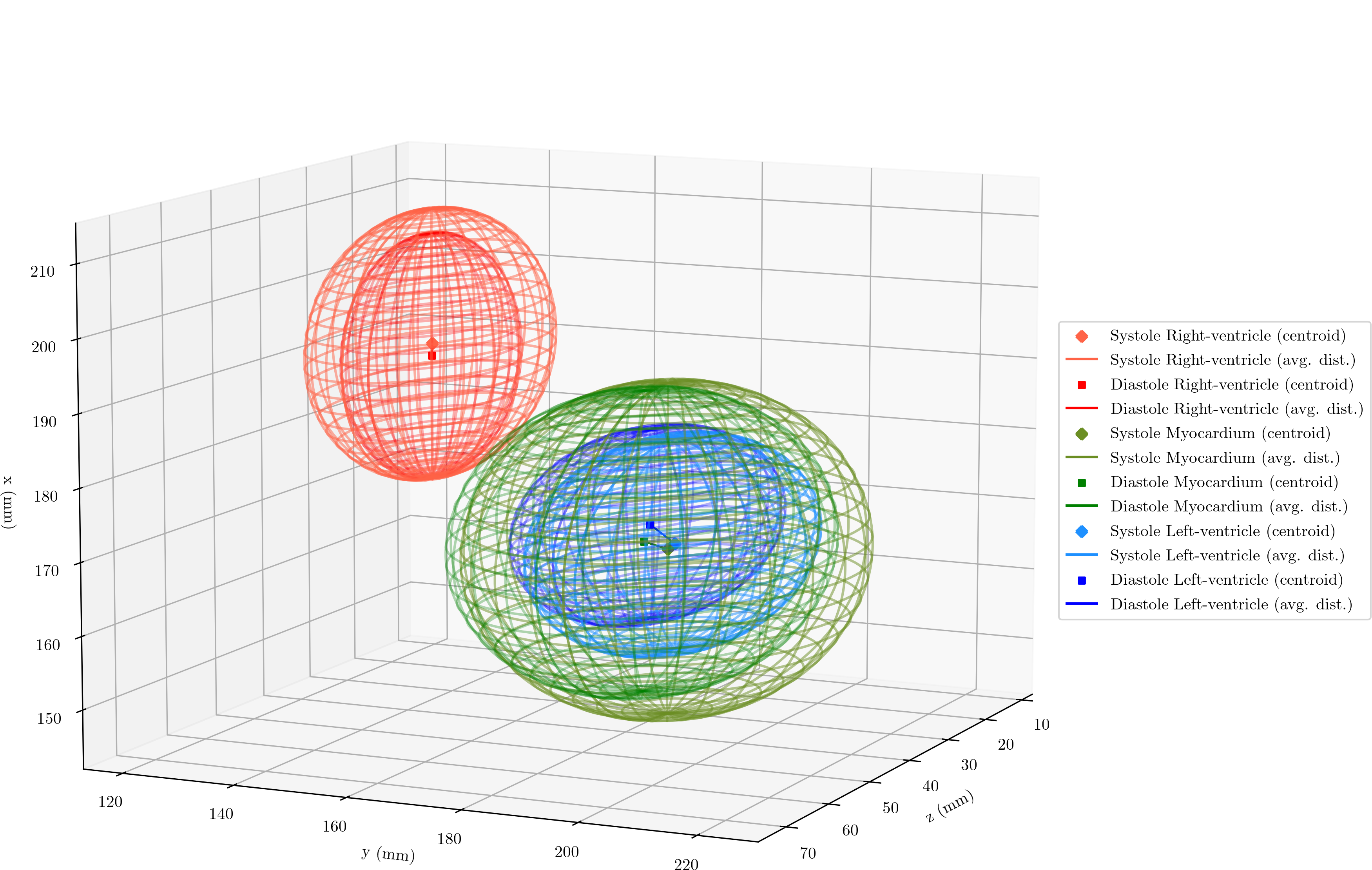

This study investigates a curriculum-style strategy for semi-supervised CNN segmentation, which devises a regression network to learn image-level information such as the size of the target region. These regressions are used to effectively regularize the segmentation network, constraining the softmax predictions of the unlabeled images to match the inferred label distributions. Our framework is based on inequality constraints, which tolerate uncertainties in the inferred knowledge, e.g., regressed region size. It can be used for a large variety of region attributes. We evaluated our approach for left ventricle segmentation in magnetic resonance images (MRI), and compared it to standard proposal-based semi-supervision strategies. Our method achieves competitive results, leveraging unlabeled data in a more efficient manner and approaching full-supervision performance.

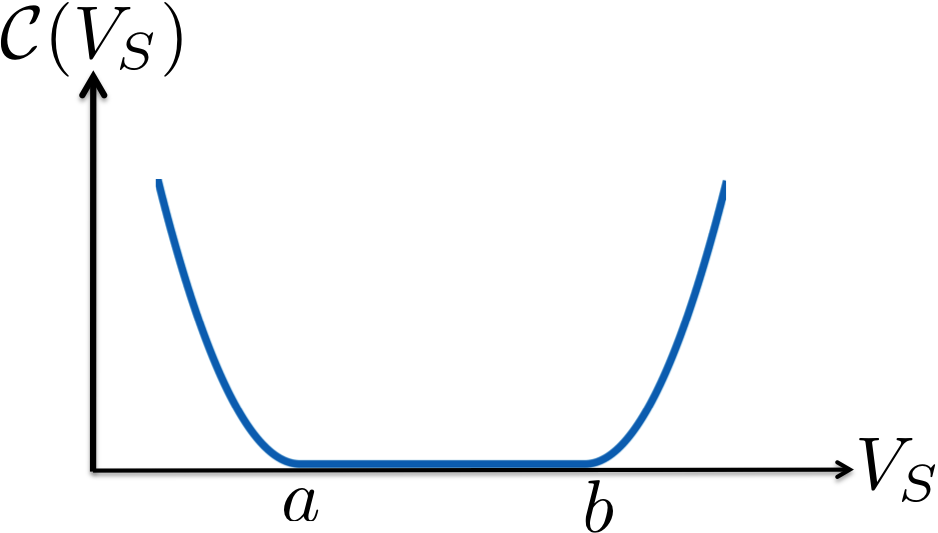

Constrained-CNN losses for weakly supervised segmentation

H Kervadec, J Dolz, M Tang, E Granger, Y Boykov, I Ben Ayed

Medical Image Analysis (MedIA) 54.

Journal extension of the conference paper.

PDF

·

Code

Weakly-supervised learning based on, e.g., partially labelled images or image-tags, is currently attracting significant attention in CNN segmentation as it can mitigate the need for full and laborious pixel/voxel annotations.

Enforcing high-order (global) inequality constraints on the network output (for instance, to constrain the size of the target region) can leverage unlabeled data, guiding the training process with domain-specific knowledge.

Inequality constraints are very flexible because they do not assume exact prior knowledge. However, constrained Lagrangian dual optimization has been largely avoided in deep networks, mainly for computational tractability reasons. To the best of our knowledge, the method of [Pathak et al. 2015] is the only prior work that addresses deep CNNs with linear constraints in weakly supervised segmentation. It uses the constraints to synthesize fully-labeled training masks (proposals) from weak labels, mimicking full supervision and facilitating dual optimization.

We propose to introduce a differentiable penalty, which enforces inequality constraints directly in the loss function, avoiding expensive Lagrangian dual iterates and proposal generation. From a constrained-optimization perspective, our simple penalty-based approach is not optimal, as there is no guarantee that the constraints are satisfied. However, surprisingly, it yields substantially better results than the Lagrangian-based constrained CNNs in [Pathak et al. 2015], while reducing the computational demand for training.

By annotating only a small fraction of the pixels, the proposed approach can reach a level of segmentation performance that is comparable to full supervision on three separate tasks.

While our experiments focused on basic linear constraints such as the target-region size and image tags, our framework can be easily extended to other non-linear constraints, e.g., invariant shape moments and other region statistics. Therefore, it has the potential to close the gap between weakly and fully supervised learning in semantic medical image segmentation. Our code is publicly available.
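A size constraint of this kind can be sketched as a penalty that is zero when the soft region size lies within given bounds and grows smoothly outside them. The quadratic form, the function name `size_penalty` and the bound names `lower` and `upper` are assumptions chosen for illustration; the abstract only states that the penalty is differentiable.

```python
def size_penalty(probs, lower, upper):
    """Quadratic penalty on the soft region size, zero inside [lower, upper].

    probs: flat list of foreground probabilities for one image.
    lower, upper: hypothetical bounds on the target-region size, in pixels.
    """
    size = sum(probs)  # soft size: sum of the foreground probabilities
    if size < lower:
        return (size - lower) ** 2
    if size > upper:
        return (size - upper) ** 2
    return 0.0


# Predicted size of 7 pixels against bounds [3, 5]: penalized.
print(size_penalty([1.0] * 7, lower=3, upper=5))
```

Because the bounds form an interval rather than a single value, the prior knowledge does not need to be exact, which matches the flexibility of inequality constraints noted in the abstract.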

Boundary loss for highly unbalanced segmentation Oral Runner-up for best paper award

H Kervadec, J Bouchtiba, C Desrosiers, É Granger, J Dolz, I Ben Ayed

Medical Imaging with Deep Learning (Midl).

A journal extension was published in Medical Image Analysis (2020).

PDF

·

OpenReview

·

Code

·

Talk

Widely used loss functions for convolutional neural network (CNN) segmentation, e.g., Dice or cross-entropy, are based on integrals (summations) over the segmentation regions. Unfortunately, for highly unbalanced segmentations, such regional losses have values that differ considerably, typically by several orders of magnitude, across segmentation classes, which may affect training performance and stability. We propose a boundary loss, which takes the form of a distance metric on the space of contours (or shapes), not regions. This can mitigate the difficulties of regional losses in the context of highly unbalanced segmentation problems because it uses integrals over the boundary (interface) between regions instead of unbalanced integrals over regions. Furthermore, a boundary loss provides information that is complementary to regional losses. Unfortunately, it is not straightforward to represent the boundary points corresponding to the regional softmax outputs of a CNN. Our boundary loss is inspired by discrete (graph-based) optimization techniques for computing gradient flows of curve evolution. Following an integral approach for computing boundary variations, we express a non-symmetric $L_2$ distance on the space of shapes as a regional integral, which completely avoids local differential computations involving contour points. This yields a boundary loss expressed with the regional softmax probability outputs of the network, which can be easily combined with standard regional losses and implemented with any existing deep network architecture for N-D segmentation. We report comprehensive evaluations on two benchmark datasets corresponding to difficult, highly unbalanced problems: the ischemic stroke lesion (ISLES) and white matter hyperintensities (WMH). Used in conjunction with the region-based generalized Dice loss (GDL), our boundary loss improves performance significantly compared to GDL alone, reaching up to 8% improvement in Dice score and 10% improvement in Hausdorff score. It also yielded a more stable learning process. Our code is publicly available.

2018

Constrained-CNN losses for weakly supervised segmentation Oral CIFAR student travel award

H Kervadec, J Dolz, M Tang, E Granger, Y Boykov, I Ben Ayed

Medical Imaging with Deep Learning (Midl).

A journal extension was published in Medical Image Analysis (2019).

PDF

·

OpenReview

·

Code

·

Talk

Weak supervision, e.g., in the form of partial labels or image tags, is currently attracting significant attention in CNN segmentation as it can mitigate the lack of full and laborious pixel/voxel annotations, a common problem in medical imaging. Embedding high-order (global) inequality constraints on the network output, for instance, on the size of the target region, can leverage unlabeled data, guiding training with domain-specific knowledge. Inequality constraints are very flexible because they do not assume exact prior knowledge. However, constrained Lagrangian optimization has been largely avoided in deep networks, mainly for computational tractability reasons. To the best of our knowledge, the method of Pathak et al. is the only prior work that addresses constrained deep CNNs in weakly supervised segmentation. It uses the constraints to synthesize fully-labeled training masks (proposals) from weak labels, mimicking full supervision and facilitating dual optimization.

We propose to introduce a differentiable term, which enforces inequality constraints directly in the loss function, avoiding expensive Lagrangian dual iterates and proposal generation. From a constrained-optimization perspective, our simple approach is not optimal, as there is no guarantee that the constraints are satisfied. However, surprisingly, it yields substantially better results than the proposal-based constrained CNNs in Pathak et al., while reducing the computational demand for training. In the context of cardiac image segmentation, we reached a segmentation performance close to full supervision while using a fraction of the ground-truth labels (0.1% of the pixels of the ground-truth masks) and image-level tags. Our framework can be easily extended to other inequality constraints, e.g., shape moments or region statistics. Therefore, it has the potential to close the gap between weakly and fully supervised learning in semantic medical image segmentation. Our code is publicly available.

2014

Polystyrene: The decentralized data shape that never dies

S Bouget, H Kervadec, AM Kermarrec, F Taïani

International Conference on Distributed Computing Systems

PDF

Decentralized topology construction protocols organize nodes along a predefined topology (e.g. a torus, ring, or hypercube). Such topologies have been used in many contexts ranging from routing and storage systems, to publish-subscribe and event dissemination. Since most topologies assume no correlation between the physical location of nodes and their positions in the topology, they do not handle catastrophic failures well, in which a whole region of the topology disappears. When this occurs, the overall shape of the system typically gets lost. This is highly problematic in applications in which overlay nodes are used to map a virtual data space, be it for routing, indexing or storage. In this paper, we propose a novel decentralized approach that maintains the initial shape of the topology even if a large (consecutive) portion of the topology fails. Our approach relies on the dynamic decoupling between physical nodes and virtual ones enabling a fast reshaping. For instance, our results show that a 51,200-node torus converges back to a full torus in only 10 rounds after 50% of the nodes have crashed. Our protocol is both simple and flexible and provides a novel form of collective survivability that goes beyond the current state of the art.

Collaborations

2023

Nested star-shaped objects segmentation using diameter annotations

R Camarasa, H Kervadec, ME Kooi, J Hendrikse, P Nederkoorn, D Bos, M de Bruijne

Medical Image Analysis

Journal extension of the Midl 2022 paper.

Code

Most current deep learning based approaches for image segmentation require annotations of large datasets, which limits their application in clinical practice. We observe a mismatch between the voxelwise ground-truth that is required to optimize an objective at a voxel level and the commonly used, less time-consuming clinical annotations seeking to characterize the most important information about the patient (diameters, counts, etc.). In this study, we propose to bridge this gap for the case of multiple nested star-shaped objects (e.g., a blood vessel lumen and its outer wall) by optimizing a deep learning model based on diameter annotations. This is achieved by extracting in a differentiable manner the boundary points of the objects at training time, and by using this extraction during the backpropagation. We evaluate the proposed approach on segmentation of the carotid artery lumen and wall from multisequence MR images, thus reducing the annotation burden to only four annotated landmarks required to measure the diameters in the direction of the vessel’s maximum narrowing. Our experiments show that training based on diameter annotations produces state-of-the-art weakly supervised segmentations and performs reasonably compared to full supervision. We made our code publicly available at https://gitlab.com/radiology/aim/carotid-artery-image-analysis/nested-star-shaped-objects

2022

Source-free domain adaptation for image segmentation

M Bateson, H Kervadec, J Dolz, H Lombaert, I Ben Ayed

Medical Image Analysis

Journal extension of the Miccai 2020 paper.

PDF ·

Code

Domain adaptation (DA) has drawn high interest for its capacity to adapt a model trained on labeled source data to perform well on unlabeled or weakly labeled target data from a different domain. Most common DA techniques require concurrent access to the input images of both the source and target domains. However, in practice, privacy concerns often impede the availability of source images in the adaptation phase. This is a very frequent DA scenario in medical imaging, where, for instance, the source and target images could come from different clinical sites. We introduce a source-free domain adaptation for image segmentation. Our formulation is based on minimizing a label-free entropy loss defined over target-domain data, which we further guide with weak labels of the target samples and a domain-invariant prior on the segmentation regions. Many priors can be derived from anatomical information. Here, a class-ratio prior is estimated from anatomical knowledge and integrated in the form of a Kullback–Leibler (KL) divergence in our overall loss function. Furthermore, we motivate our overall loss with an interesting link to maximizing the mutual information between the target images and their label predictions. We show the effectiveness of our prior-aware entropy minimization in a variety of domain-adaptation scenarios, with different modalities and applications, including spine, prostate and cardiac segmentation. Our method yields comparable results to several state-of-the-art adaptation techniques, despite having access to much less information, as the source images are entirely absent in our adaptation phase. Our straightforward adaptation strategy uses only one network, contrary to popular adversarial techniques, which are not applicable to a source-free DA setting. Our framework can be readily used in a breadth of segmentation problems, and our code is publicly available: https://github.com/mathilde-b/SFDA.

Differentiable Boundary Point Extraction for Weakly Supervised Star-shaped Object Segmentation Oral Runner-up for best paper award

R Camarasa, H Kervadec, D Bos, M de Bruijne

Medical Imaging with Deep Learning (Midl)

Code

Although deep learning is the new gold standard in medical image segmentation, the annotation burden limits its expansion to clinical practice. We also observe a mismatch between annotations required by deep learning methods designed with pixel-wise optimization in mind and clinically relevant annotations designed for biomarker extraction (diameters, counts, etc.). Our study proposes a first step toward bridging this gap, optimizing vessel segmentation based on its diameter annotations. To do so, we propose to extract boundary points from a star-shaped segmentation in a differentiable manner. This differentiable extraction reduces the annotation burden: instead of the pixel-wise segmentation, only the two annotated points required for diameter measurement are used for training the model. Our experiments show that training based on diameter is efficient, produces state-of-the-art weakly supervised segmentation, and performs reasonably compared to full supervision.

2021

Few-Shot Segmentation Without Meta-Learning: A Good Transductive Inference Is All You Need?

M Boudiaf, H Kervadec, Z I Masud, J Dolz, I Ben Ayed

Conference on Computer Vision and Pattern Recognition (CVPR)

Pre-print

·

Code

Few-shot segmentation has recently attracted substantial interest, with the popular meta-learning paradigm widely dominating the literature. We show that the way inference is performed for a given few-shot segmentation task has a substantial effect on performance, an aspect that has been overlooked in the literature. We introduce a transductive inference, which leverages the statistics of the unlabeled pixels of a task by optimizing a new loss containing three complementary terms: (i) a standard cross-entropy on the labeled pixels; (ii) the entropy of posteriors on the unlabeled query pixels; and (iii) a global KL-divergence regularizer based on the proportion of the predicted foreground region. Our inference uses a simple linear classifier of the extracted features, has a computational load comparable to inductive inference and can be used on top of any base training. Using standard cross-entropy training on the base classes, our inference yields highly competitive performance on well-known few-shot segmentation benchmarks. On PASCAL-5i, it brings about 5% improvement over the best performing state-of-the-art method in the 5-shot scenario, while being on par in the 1-shot setting. Even more surprisingly, this gap widens as the number of support samples increases, reaching up to 6% in the 10-shot scenario. Furthermore, we introduce a more realistic setting with domain shift, where the base and novel classes are drawn from different data sets. In this setting, we found that our method achieves the best performance.
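The three terms listed above can be sketched for a binary (foreground/background) task in plain Python. This is only an illustration of how such terms combine: the function names, the weights `w_ent` and `w_kl`, the direction of the KL divergence and the way the foreground prior `prior_fg` is supplied are all assumptions, not details taken from the paper.

```python
import math


def _clip(p, eps=1e-12):
    """Keep a probability strictly inside (0, 1) so the logs stay finite."""
    return min(max(p, eps), 1.0 - eps)


def binary_entropy(p):
    """Shannon entropy of a Bernoulli posterior with foreground probability p."""
    p = _clip(p)
    return -(p * math.log(p) + (1.0 - p) * math.log(1.0 - p))


def transductive_loss(support_probs, support_labels, query_probs, prior_fg,
                      w_ent=1.0, w_kl=1.0):
    """Cross-entropy + entropy + KL sketch (weights are hypothetical)."""
    # (i) cross-entropy on the labeled (support) pixels
    ce = -sum(math.log(_clip(p if y == 1 else 1.0 - p))
              for p, y in zip(support_probs, support_labels)) / len(support_probs)
    # (ii) mean entropy of the posteriors on the unlabeled query pixels
    ent = sum(binary_entropy(p) for p in query_probs) / len(query_probs)
    # (iii) KL divergence between the predicted foreground proportion
    #       and an assumed prior proportion
    q = _clip(sum(query_probs) / len(query_probs))
    pi = _clip(prior_fg)
    kl = q * math.log(q / pi) + (1.0 - q) * math.log((1.0 - q) / (1.0 - pi))
    return ce + w_ent * ent + w_kl * kl


# Confident predictions that match the prior give a lower loss than
# uncertain support predictions with an over-sized query foreground.
print(transductive_loss([0.99, 0.01], [1, 0], [0.99, 0.01], prior_fg=0.5))
print(transductive_loss([0.5, 0.5], [1, 0], [0.9, 0.9], prior_fg=0.5))
```

The KL term is what keeps the entropy term from collapsing every query pixel to a single class, since it ties the overall foreground proportion to the prior.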

Constrained domain adaptation for segmentation

M Bateson, J Dolz, H Kervadec, H Lombaert, I Ben Ayed

IEEE Transactions on Medical Imaging.

Journal extension of the conference paper.

PDF

·

Code

Domain adaptation tasks have recently attracted substantial attention in computer vision as they improve the transferability of deep network models from a source to a target domain with different characteristics. A large body of state-of-the-art domain-adaptation methods was developed for image classification purposes, which may be inadequate for segmentation tasks. We propose to adapt segmentation networks with a constrained formulation, which embeds domain-invariant prior knowledge about the segmentation regions. Such knowledge may take the form of anatomical information, for instance, structure size or shape, which can be known a priori or learned from the source samples via an auxiliary task. Our general formulation imposes inequality constraints on the network predictions of unlabeled or weakly labeled target samples, thereby matching implicitly the prediction statistics of the target and source domains, with permitted uncertainty of prior knowledge. Furthermore, our inequality constraints easily integrate weak annotations of the target data, such as image-level tags. We address the ensuing constrained optimization problem with differentiable penalties, fully suited for conventional stochastic gradient descent approaches. Unlike common two-step adversarial training, our formulation is based on a single segmentation network, which simplifies adaptation, while improving training quality. Comparison with state-of-the-art adaptation methods reveals considerably better performance of our model on two challenging tasks. Particularly, it consistently yields a performance gain of 1-4% Dice across architectures and datasets. Our results also show robustness to imprecision in the prior knowledge. The versatility of our novel approach can be readily used in various segmentation problems, with code available publicly.

2020

Laplacian pyramid-based complex neural network learning for fast MR imaging

H Liang, Y Gong, H Kervadec, J Yuan, H Zheng, S Wang

Medical Imaging with Deep Learning (Midl)

PDF

·

Talk

A Laplacian pyramid-based complex neural network, CLP-Net, is proposed to reconstruct high-quality magnetic resonance images from undersampled k-space data. Specifically, three major contributions have been made: 1) A new framework has been proposed to explore the encouraging multi-scale properties of Laplacian pyramid decomposition; 2) A cascaded multi-scale network architecture with complex convolutions has been designed under the proposed framework; 3) Experimental validations on an open source dataset fastMRI demonstrate the encouraging properties of the proposed method in preserving image edges and fine textures.

Source-Relaxed Domain Adaptation for Image Segmentation

M Bateson, H Kervadec, J Dolz, H Lombaert, I Ben Ayed

Medical Image Computing and Computer Assisted Intervention (Miccai)

A journal extension was published in Medical Image Analysis (2022).

PDF

Domain adaptation (DA) has drawn high interest for its capacity to adapt a model trained on labeled source data to perform well on unlabeled or weakly labeled target data from a different domain. Most common DA techniques require concurrent access to the input images of both the source and target domains. However, in practice, it is common that the source images are not available in the adaptation phase. This is a very frequent DA scenario in medical imaging, for instance, when the source and target images come from different clinical sites. We propose a novel formulation for adapting segmentation networks, which relaxes such a constraint. Our formulation is based on minimizing a label-free entropy loss defined over target-domain data, which we further guide with a domain-invariant prior on the segmentation regions. Many priors can be used, derived from anatomical information. Here, a class-ratio prior is learned via an auxiliary network and integrated in the form of a Kullback-Leibler (KL) divergence in our overall loss function. We show the effectiveness of our prior-aware entropy minimization in adapting spine segmentation across different MRI modalities. Our method yields comparable results to several state-of-the-art adaptation techniques, even though it has access to less information, the source images being absent in the adaptation phase. Our straightforward adaptation strategy only uses one network, contrary to popular adversarial techniques, which cannot perform without the presence of the source images. Our framework can be readily used with various priors and segmentation problems.

Discretely-constrained deep network for weakly supervised segmentation

J Peng, H Kervadec, J Dolz, I Ben Ayed, M Pedersoli, C Desrosiers

Neural networks

PDF

An efficient strategy for weakly-supervised segmentation is to impose constraints or regularization priors on target regions. Recent efforts have focused on incorporating such constraints in the training of convolutional neural networks (CNN); however, this has so far been done within a continuous optimization framework. Yet, various segmentation constraints and regularization can be modeled and optimized more efficiently in a discrete formulation. This paper proposes a method, based on the alternating direction method of multipliers (ADMM) algorithm, to train a CNN with discrete constraints and regularization priors. This method is applied to the segmentation of medical images with weak annotations, where both size constraints and boundary length regularization are enforced. Experiments on a benchmark cardiac segmentation dataset show that our method yields a performance close to full supervision.

2019

Constrained domain adaptation for segmentation

M Bateson, J Dolz, H Kervadec, H Lombaert, I Ben Ayed

Medical Image Computing and Computer Assisted Intervention (Miccai)

PDF

·

Code

We propose to adapt segmentation networks with a constrained formulation, which embeds domain-invariant prior knowledge about the segmentation regions. Such knowledge may take the form of simple anatomical information, e.g., structure size or shape, estimated from source samples or known a priori. Our method imposes domain-invariant inequality constraints on the network outputs of unlabeled target samples. It implicitly matches prediction statistics between target and source domains with permitted uncertainty of prior knowledge. We address our constrained problem with a differentiable penalty, fully suited for standard stochastic gradient descent approaches, removing the need for computationally expensive Lagrangian optimization with dual projections. Unlike current two-step adversarial training, our formulation is based on a single loss in a single network, which simplifies adaptation by avoiding extra adversarial steps, while improving convergence and quality of training.

The comparison of our approach with state-of-the-art adversarial methods reveals substantially better performance on the challenging task of adapting spine segmentation across different MRI modalities. Our results also show a robustness to imprecision of size priors, approaching the accuracy of a fully supervised model trained directly in a target domain. Our method can be readily used for various constraints and segmentation problems.